As data continues to drive key decision-making in organizations, DataOps has emerged as a methodology that brings data engineers, data scientists, and IT operations together.

In decentralized architectures like data mesh, where each domain team manages its own data products, DataOps is often seen as the operational backbone that helps these teams move fast and maintain data quality across the board.

Alas, while DataOps promises to improve efficiency, it can also introduce its own set of challenges, especially in decentralized environments.

In this article, we will explore the role of DataOps in decentralized teams, the key use cases it supports, and how to balance the potential benefits with the complexity it can introduce. We’ll also touch on some of the tools that can help implement DataOps successfully in a decentralized architecture like data mesh.

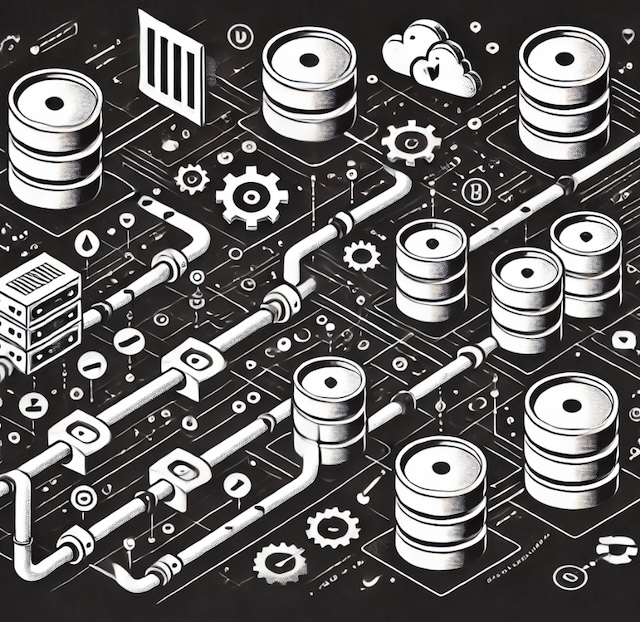

DataOps is an approach that brings DevOps-like principles to the management of data pipelines. It emphasizes automation, collaboration, and continuous delivery of data in order to improve the reliability, speed, and scalability of data workflows. In a decentralized setup like data mesh, where each team operates independently, DataOps becomes essential for enabling teams to manage their own data products effectively without creating silos or operational bottlenecks.

Yes, you can think of it as “DevOps for data.” So what are the benefits?

With DataOps, teams can automate data pipeline development, deployment, and maintenance. Automation reduces the need for manual intervention, lowers the risk of human error, and enables data teams to focus on innovation rather than infrastructure management.

By implementing CI/CD (Continuous Integration/Continuous Deployment) practices for data, DataOps enables teams to make quick updates to data pipelines and push changes to production without delays. This is especially important in decentralized environments, where teams need the agility to iterate on their data products frequently.
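One common way to keep CI feedback fast for data pipelines is to rebuild and test only what a change touches. The minimal sketch below assumes a dbt project where every model is a `.sql` file under `models/` whose file name equals the model name (dbt's default convention); the helper name `build_selector` is hypothetical. It turns a list of changed files into a `dbt build --select` command, using dbt's `+` suffix to include downstream models. dbt's own "slim CI" approach achieves something similar with `--select state:modified+` and `--state`, comparing against a production manifest.

```python
from pathlib import PurePosixPath


def build_selector(changed_files: list[str]) -> list[str]:
    """Map changed SQL model files to a `dbt build` command (hypothetical helper).

    Assumes models live under a top-level `models/` directory and that the
    file stem equals the model name.
    """
    models = sorted(
        {
            PurePosixPath(path).stem
            for path in changed_files
            if path.startswith("models/") and path.endswith(".sql")
        }
    )
    if not models:
        # No model files changed, so there is nothing to rebuild or test.
        return []
    # The trailing "+" asks dbt to also select every downstream model.
    return ["dbt", "build", "--select", *(f"{name}+" for name in models)]


if __name__ == "__main__":
    changed = [
        "models/finance/fct_transactions.sql",
        "models/finance/stg_payments.sql",
        "README.md",
    ]
    print(" ".join(build_selector(changed)))
```

Including downstream models matters in a mesh: a change in one domain's staging model is re-tested together with everything built on top of it before it reaches production.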

DataOps includes practices like automated testing, monitoring, and validation, which help ensure that data pipelines deliver accurate, clean data. In decentralized architectures, this helps prevent the spread of low-quality or corrupted data across different domains.
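To make "automated testing and validation" concrete, here is a minimal sketch of the kind of checks a pipeline can run before publishing data, loosely modeled on dbt's built-in `not_null` and `unique` tests plus a simple range rule. It works on plain Python rows (dictionaries); the function names and the `amount >= 0` rule for a transactions product are illustrative assumptions, not any specific tool's API.

```python
from typing import Any

Row = dict[str, Any]


def check_not_null(rows: list[Row], column: str) -> list[str]:
    """Flag rows where `column` is missing or None (like dbt's not_null test)."""
    return [f"row {i}: {column} is null" for i, row in enumerate(rows) if row.get(column) is None]


def check_unique(rows: list[Row], column: str) -> list[str]:
    """Flag repeated non-null values of `column` (like dbt's unique test)."""
    seen: set[Any] = set()
    errors = []
    for i, row in enumerate(rows):
        value = row.get(column)
        if value is None:
            continue  # nulls are the not_null check's job
        if value in seen:
            errors.append(f"row {i}: duplicate {column}={value!r}")
        seen.add(value)
    return errors


def check_min(rows: list[Row], column: str, minimum: float) -> list[str]:
    """Flag non-null values of `column` below `minimum`."""
    return [
        f"row {i}: {column}={row[column]!r} is below {minimum}"
        for i, row in enumerate(rows)
        if row.get(column) is not None and row[column] < minimum
    ]


def validate_transactions(rows: list[Row]) -> list[str]:
    """Run a hypothetical rule set for a transactions data product."""
    return (
        check_not_null(rows, "transaction_id")
        + check_not_null(rows, "amount")
        + check_unique(rows, "transaction_id")
        + check_min(rows, "amount", 0)
    )


if __name__ == "__main__":
    rows = [
        {"transaction_id": 1, "amount": 120.0},
        {"transaction_id": 2, "amount": -5.0},
        {"transaction_id": 2, "amount": None},
    ]
    for problem in validate_transactions(rows):
        print(problem)
```

Returning a list of problems rather than raising on the first one lets a pipeline report every issue in a single run before deciding whether to block publication.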

In data mesh architectures, teams often need to share data products across domains. DataOps fosters collaboration between these teams by standardizing data workflows and providing visibility into data pipelines, making it easier to integrate and use data from other domains.
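One lightweight way to make cross-domain sharing safer is a data contract: the consuming domain declares the columns and types it relies on, and the producing domain's CI checks its published schema against that declaration. The sketch below represents schemas as plain `{column: type}` dictionaries; the contract format, the function name, and the example schemas are all hypothetical.

```python
def contract_violations(published: dict[str, str], contract: dict[str, str]) -> list[str]:
    """Compare a producer's published schema with a consumer's contract.

    Extra columns in the published schema are allowed (an additive change);
    missing columns or changed types break the contract.
    """
    problems = []
    for column, expected_type in contract.items():
        actual_type = published.get(column)
        if actual_type is None:
            problems.append(f"missing column: {column}")
        elif actual_type != expected_type:
            problems.append(f"type change on {column}: expected {expected_type}, got {actual_type}")
    return problems


if __name__ == "__main__":
    # Schema published by a (hypothetical) finance data product.
    finance_schema = {
        "transaction_id": "integer",
        "amount": "numeric",
        "booked_at": "timestamp",
        "channel": "varchar",
    }
    # Columns the sales domain depends on.
    sales_contract = {"transaction_id": "integer", "amount": "numeric", "booked_at": "date"}
    print(contract_violations(finance_schema, sales_contract))
```

Allowing extra columns while rejecting removals and type changes keeps producers free to evolve their products without silently breaking consumers.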

While DataOps brings clear benefits, it also introduces certain complexities when implemented in decentralized architectures like data mesh. The independence of domain teams means that maintaining consistent DataOps practices across the organization can be difficult, and the diversity of tools and technologies can complicate automation.

In decentralized teams, different domains often have unique data requirements and preferences for tools. One team may prefer Apache Airflow for orchestration, while another may use dbt (Data Build Tool) for data transformation. The result is a fragmented tool landscape that complicates cross-domain collaboration and makes it harder to build integrated DataOps workflows. Of course, there are also hybrid setups in between, where running dbt via Airflow is the norm.

While automation is a key benefit of DataOps, it can be difficult to enforce consistent standards for data quality when each team is responsible for its own pipelines. Decentralized teams may follow different testing practices, and without clear guidelines, there is a risk that data quality issues in one domain could affect other domains downstream.

Imagine a finance team in a data mesh pushing corrupted or incomplete transaction data into its pipeline. Without standardized data validation rules across teams, this flawed data could end up being used by another team (such as sales or business intelligence), leading to inaccurate reports and poor decisions. A tip to stay on the safe side: before the final form of a data product is made available to other domains (i.e., before it lands in the conformed/consumed zones), the shared framework should force QA tests to run. For us, that is the `dbt build` command, which builds the models and tests them.
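A minimal sketch of such a gate, assuming the promotion step is scripted in Python: it runs `dbt build` and calls the promotion step only if the command exits with code 0 (dbt exits with a non-zero code when a model or test fails). The function name and the `promote` callback are hypothetical placeholders for however your platform publishes data to the conformed/consumed zone.

```python
import subprocess
from typing import Callable, Sequence


def build_then_promote(
    promote: Callable[[], None],
    command: Sequence[str] = ("dbt", "build"),
) -> bool:
    """Run the build-and-test command and promote only if it succeeds.

    `promote` is a hypothetical callback that publishes the data product
    to the zone other domains read from.
    """
    result = subprocess.run(list(command), check=False)
    if result.returncode != 0:
        # A failed model or test blocks promotion to the shared zone.
        print(f"QA gate failed (exit code {result.returncode}); not promoting.")
        return False
    promote()
    return True


if __name__ == "__main__":
    build_then_promote(lambda: print("Publishing to the conformed zone..."))
```

Keeping the command as a parameter lets the same gate wrap a narrower selection (for example, `dbt build --select` on one domain's models) without changing the promotion logic.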

In a decentralized architecture, maintaining data governance, security, and compliance is an ongoing challenge. DataOps requires a balance between giving teams the flexibility to manage their own data products and ensuring that sensitive data is protected and governed according to organizational policies. This can be particularly difficult when different teams operate with different tools, cloud environments, and levels of expertise.

A domain team handling customer data may implement its own security policies around data access and encryption, while another team dealing with less sensitive data might adopt a more relaxed approach. For the former, data masking comes in handy; in our mesh projects we currently use Snowflake’s. It protects sensitive data from unauthorized access while still letting authorized users see it, based on tags applied to particular models, tables, or columns. Needless to say, this lack of consistency can expose your organization to compliance risks and security vulnerabilities that ISO and SOC auditors will happily cling to. Better to address it in advance!
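In Snowflake, this is done with masking policies, which are evaluated at query time and can be assigned to columns directly or, with tag-based masking, to a tag that is then set on tables or columns. To keep all examples here in one language, the following is a toy Python model of that idea, not Snowflake's API: columns carry tags, each tag maps to a masking rule, and an allow-list of roles decides who sees raw values. The table, tag, and role names are hypothetical.

```python
from typing import Any, Callable

# Hypothetical tag metadata: which columns of a table carry which tag.
COLUMN_TAGS: dict[str, dict[str, str]] = {
    "customers": {"email": "pii", "phone": "pii"},
}

# Hypothetical rule per tag: roles allowed to see raw values, and the mask for everyone else.
MASKING_RULES: dict[str, tuple[set[str], Callable[[Any], Any]]] = {
    "pii": ({"PII_READER"}, lambda value: "***MASKED***"),
}


def read_row(table: str, row: dict[str, Any], role: str) -> dict[str, Any]:
    """Return the row as seen by `role`, masking tagged columns it may not read."""
    tags = COLUMN_TAGS.get(table, {})
    visible = {}
    for column, value in row.items():
        rule = MASKING_RULES.get(tags.get(column))
        if rule is None:
            # Untagged column (or tag without a rule): returned as-is.
            visible[column] = value
            continue
        allowed_roles, mask = rule
        visible[column] = value if role in allowed_roles else mask(value)
    return visible


if __name__ == "__main__":
    row = {"email": "ana@example.com", "phone": "+48 600 000 000", "country": "PL"}
    print(read_row("customers", row, "ANALYST"))
    print(read_row("customers", row, "PII_READER"))
```

The design mirrors tag-based masking: governance is declared once per tag rather than per column, so a new sensitive column only needs the right tag to be protected.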

Although DataOps aims to reduce manual effort and increase automation, setting up and running DataOps pipelines can introduce operational overhead, particularly for smaller teams. Maintaining automated testing, monitoring, and CI/CD pipelines for data requires continuous tuning and updates, which may not be feasible for every domain team, especially those with fewer resources.

In a data mesh, a small team focused on developing a specific data product may find it challenging to set up complex DataOps pipelines without support from a central team. The time spent managing infrastructure could detract from the team’s ability to deliver value through its data product. Not to mention the interaction between DevOps and the data team, which could easily serve as a topic for a separate article…

To successfully implement DataOps in decentralized teams without overwhelming them with complexity, it’s essential to strike the right balance between standardization and autonomy. Here are some strategies to achieve that balance:

Rather than mandating a rigid set of tools and workflows for all teams, organizations should define centralized guardrails (policies and best practices that every team must follow) while still giving teams flexibility in how they implement DataOps. These guardrails can include rules around data validation, security, and governance, while leaving teams free to choose their own tools for orchestration, transformation, and analysis.

Example: An organization could standardize on a tool like Great Expectations for data validation and quality checks across all teams, but allow individual domains to choose their preferred orchestration tools (e.g., Airflow, Prefect, or Dagster) based on their specific needs.
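One way to express such guardrails is as a policy check that runs in every domain's CI: it requires certain fields in each data product's descriptor (name, owner, data classification, and a validation suite) but deliberately does not constrain the orchestrator. The descriptor fields, the allowed classifications, and the function name below are hypothetical; in practice, the validation suite could be a Great Expectations suite, a set of dbt tests, or similar.

```python
REQUIRED_FIELDS = ("name", "owner", "classification", "validation_suite")
ALLOWED_CLASSIFICATIONS = {"public", "internal", "confidential"}


def guardrail_violations(product: dict) -> list[str]:
    """Check a data product descriptor against organization-wide guardrails.

    The orchestrator is intentionally not checked: domains may use
    Airflow, Prefect, Dagster, or anything else.
    """
    problems = [f"missing field: {field}" for field in REQUIRED_FIELDS if not product.get(field)]
    classification = product.get("classification")
    if classification and classification not in ALLOWED_CLASSIFICATIONS:
        problems.append(f"unknown classification: {classification}")
    return problems


if __name__ == "__main__":
    marketing = {
        "name": "campaign_performance",
        "owner": "marketing-data",
        "classification": "internal",
        "validation_suite": "campaign_performance_suite",
        "orchestrator": "prefect",
    }
    finance = {
        "name": "transactions",
        "owner": "finance-data",
        "classification": "secret",
        "orchestrator": "airflow",
    }
    print(guardrail_violations(marketing))
    print(guardrail_violations(finance))
```

Because the check only looks at what the organization standardizes on, each domain keeps its own tool choices while the shared rules stay enforceable.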

To reduce operational overhead, encourage teams to develop reusable templates or modular data pipelines that can be easily shared across domains. This standardization allows teams to build on proven best practices while avoiding the need to reinvent the wheel every time they create a new pipeline.

Example: Tools like dbt make it easy to build modular, reusable workflows. Teams can create and share standardized SQL models for data transformation, making it easier to maintain consistency across domains while still allowing for flexibility in how transformations are customized.
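dbt achieves this with SQL models, macros, and packages. As a Python illustration of the same principle, the sketch below shows a shared pipeline template: the platform owns the standard steps (here, a required-columns check and a load step), and each domain plugs in only its own transformation. All names, including `make_pipeline`, are hypothetical.

```python
from typing import Any, Callable

Row = dict[str, Any]
Transform = Callable[[list[Row]], list[Row]]


def make_pipeline(
    required_columns: set[str],
    transform: Transform,
    load: Callable[[list[Row]], None],
) -> Callable[[list[Row]], int]:
    """Build a pipeline from a shared template.

    Standard steps (validation and loading) come from the template;
    the domain supplies only `transform`.
    """

    def run(rows: list[Row]) -> int:
        transformed = transform(rows)
        # Standard validation step shared by every domain.
        for i, row in enumerate(transformed):
            missing = required_columns - set(row)
            if missing:
                raise ValueError(f"row {i} is missing columns: {sorted(missing)}")
        load(transformed)
        return len(transformed)

    return run


if __name__ == "__main__":
    loaded: list[Row] = []
    sales_pipeline = make_pipeline(
        required_columns={"order_id", "net_amount"},
        # Domain-specific logic: the only part the sales team writes.
        transform=lambda rows: [
            {"order_id": r["order_id"], "net_amount": r["gross"] - r["tax"]} for r in rows
        ],
        load=loaded.extend,
    )
    print(sales_pipeline([{"order_id": 1, "gross": 100, "tax": 20}]), loaded)
```

Because validation lives in the template, every pipeline built from it gets the same checks without each team re-implementing them.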

Self-service platforms are a great way to empower domain teams to manage their own data products without the complexity of managing infrastructure. By providing pre-built tools and infrastructure, these platforms allow teams to focus on developing and deploying data products while ensuring that the underlying systems are reliable, secure, and compliant.

Example: A self-service platform like Terraform Cloud or AWS CloudFormation can be used to automate infrastructure provisioning for data pipelines. Teams can define their infrastructure as code (IaC) templates, which are automatically deployed and managed, reducing the complexity of infrastructure setup while maintaining control.
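Terraform also accepts configuration written as JSON (files ending in `.tf.json`), which makes it straightforward for a platform team to generate per-domain templates programmatically. The sketch below renders a minimal configuration with one S3 bucket per data zone of a domain. The naming scheme, the tags, and the choice of `aws_s3_bucket` are illustrative assumptions; a real template would also cover access policies, encryption, and so on.

```python
import json


def render_domain_storage(org: str, domain: str, zones: list[str]) -> str:
    """Render Terraform JSON creating one S3 bucket per data zone of a domain.

    Assumes `org`, `domain`, and `zones` are lowercase alphanumeric names,
    so the resulting bucket names are valid for S3.
    """
    buckets = {
        # Terraform resource name -> arguments of the aws_s3_bucket resource.
        f"{domain}_{zone}": {
            "bucket": f"{org}-{domain}-{zone}",
            "tags": {"domain": domain, "zone": zone, "managed_by": "terraform"},
        }
        for zone in zones
    }
    config = {"resource": {"aws_s3_bucket": buckets}}
    return json.dumps(config, indent=2)


if __name__ == "__main__":
    # Save the output as e.g. finance/storage.tf.json, then run `terraform plan`.
    print(render_domain_storage("acme", "finance", ["raw", "conformed"]))
```

Generating the configuration from one function means every domain's storage follows the same conventions, while the domain only chooses its name and zones.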

For more complex architectures serving more than just a few domain teams, we strongly recommend fully automated IaC driven by a DSL (domain-specific language) and integrated into CI/CD for resource provisioning, so that no custom code has to be written as demands grow. For a deeper dive into DSLs and why it makes sense to consider one, read our article When Terraform Turns Terraterror, where we cover the subject in more detail.
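To illustrate what a DSL driving IaC can look like, here is a deliberately tiny, hypothetical DSL: each line declares a domain and the resources it needs, and a parser expands it into a provisioning plan that a CI/CD job could hand to Terraform or another IaC tool. The syntax, defaults, and resource names are invented for this sketch; the point is that onboarding a new domain becomes a one-line change rather than new code.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DomainSpec:
    name: str
    zones: tuple[str, ...]
    warehouse_size: str


def parse_dsl(text: str) -> list[DomainSpec]:
    """Parse lines like 'domain finance: zones=raw,conformed warehouse=small'."""
    specs = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # allow blank lines and comments
        header, _, body = line.partition(":")
        keyword, _, name = header.partition(" ")
        if keyword != "domain" or not name.strip():
            raise ValueError(f"line {lineno}: expected 'domain <name>: ...'")
        options = dict(item.split("=", 1) for item in body.split())
        specs.append(
            DomainSpec(
                name=name.strip(),
                zones=tuple(options.get("zones", "raw").split(",")),
                warehouse_size=options.get("warehouse", "xsmall"),  # hypothetical default
            )
        )
    return specs


def plan(specs: list[DomainSpec]) -> list[str]:
    """Expand domain specs into a flat list of resources to provision."""
    resources = []
    for spec in specs:
        resources.append(f"warehouse:{spec.name}_wh ({spec.warehouse_size})")
        resources.extend(f"schema:{spec.name}.{zone}" for zone in spec.zones)
    return resources


if __name__ == "__main__":
    dsl = """
    # one line per domain
    domain finance: zones=raw,conformed warehouse=small
    domain sales: zones=raw
    """
    for resource in plan(parse_dsl(dsl)):
        print(resource)
```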

While teams should have autonomy over their data products, centralized observability and monitoring tools can help ensure that every domain’s data pipelines are functioning as expected. Implementing standardized monitoring tools allows teams to track performance, detect anomalies, and troubleshoot issues without creating blind spots across the organization.

Example: Tools like Datadog or Prometheus can be used to monitor key metrics for data pipelines, such as data latency, throughput, and error rates. Centralized monitoring provides visibility across domains, while still allowing individual teams to set up custom alerts and dashboards based on their specific needs. For maximum visibility, a unified master dashboard that aggregates data from all teams, giving the Data Management Office full observability across the organization, is definitely worth investing in.

In decentralized architectures like data mesh, DataOps is essential for ensuring that data pipelines are efficient, reliable, and scalable. However, balancing the autonomy of individual domain teams with the need for consistency, governance, and collaboration can be tricky.

By establishing clear guardrails, encouraging reusable workflows, and leveraging self-service platforms, organizations can realize the benefits of DataOps without adding unnecessary complexity.

Tools like Apache Airflow, dbt, and Dagster provide the flexibility that decentralized teams need, while centralized monitoring and governance ensure that standards are maintained across the organization. When implemented thoughtfully, DataOps can drive efficiency and innovation in decentralized teams—without overwhelming them with operational overhead.

In the upcoming article in the data mesh series, we will focus specifically on tools that can help implement DataOps in decentralized teams. Stay tuned!